All Things About Deployment, Governance, and Risk Management for Data and AI

AI Deployment, Governance, and Risk Management: The Continuum of Operational Data and AI

While massive investments are being made in Data and AI to extract business value, Deployment, Governance, and Risk Management remain the most underestimated pillars in the Data and AI transformation journey.

Deployment

When it comes to deploying Data and AI solutions, Bring DataOps, MLOps, and LLMOps into your deployment processes is critical. These practices extend DevOps principles—automation, monitoring, and continuous integration/deployment—into the data, machine learning, and generative AI domains.

What Are They?

- DataOps: A collaborative data management practice focused on improving the communication, integration, and automation of data flows between data managers and consumers.

- MLOps: A set of practices that automate and govern the lifecycle of machine learning models, including development, deployment, monitoring, and retraining.

- LLMOps: A specialization of MLOps tailored to large language models, covering prompt management, fine-tuning, evaluation, monitoring, safety, and responsible usage.

A Side Note: AIOps ≠ MLOps

If you’re in the Data and AI space long enough, you’ll hear about AIOps. Unlike MLOps, AIOps refers to the use of AI to enhance IT operations, such as monitoring, incident detection, and root cause analysis. It does not mean applying DevOps to AI.

AI Governance

AI Governance refers to the framework of policies, roles, technologies, and procedures to ensure AI systems are developed and used responsibly, ethically, and legally. It spans development, deployment, usage, and compliance with internal and external regulations.

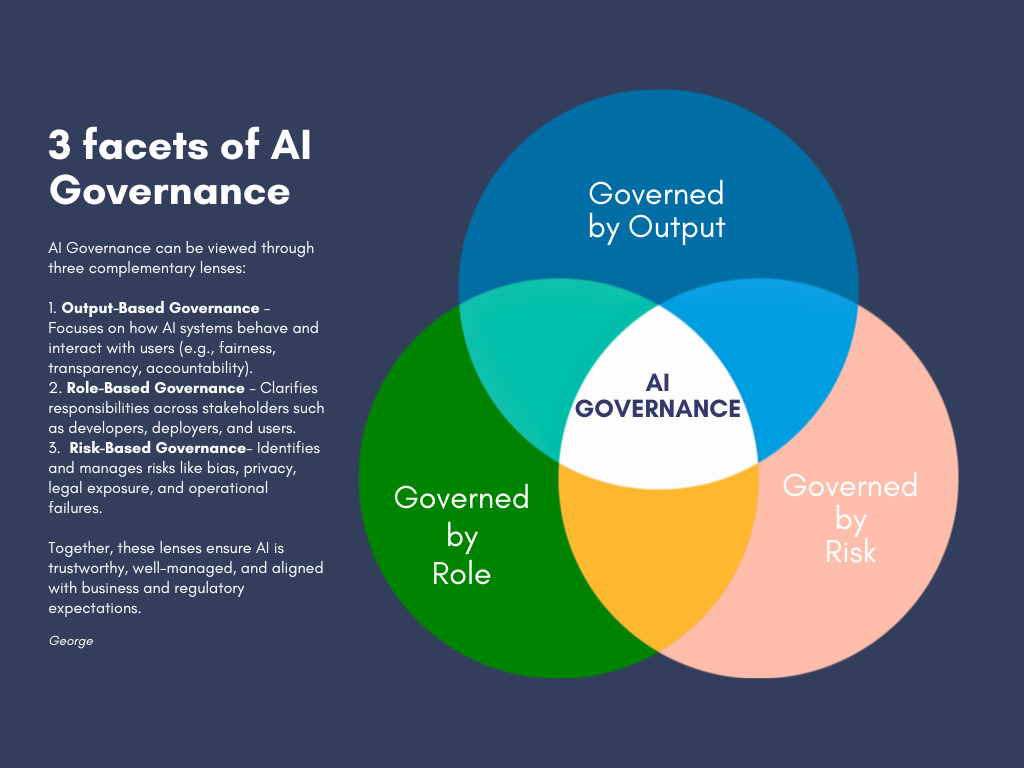

A Three-Lens AI Governance Framework

I suggest a 3 lens approach to manage your AI

- Output-Based:

- Autonomy: How independently can the AI system operate?

- Agency: Can we trust the AI to act on our behalf?

- Assurance: Are safeguards in place for safety, reliability, and fairness?

- Indicators: Metrics and benchmarks for monitoring performance.

- Interfaces: How do users interact with the system?

- Intentionality: Is the AI system behaving as designed?

- Role-Based:

- Developer: What ethical, security, and compliance factors to consider in development?

- Deployer: Responsibilities when integrating AI into operational systems.

- User: Guidelines for ethical and compliant use.

- Risk-Based:

- Harm: Define harm categories (e.g., MITRE PANOPTIC, CSET Taxonomy, Calo’s frameworks).

- Bias: Human and systemic biases, not algorithmic bias-variance tradeoff.

- Security: Data and model security.

- Privacy: Data protection and compliance.

- Legal Compliance: Laws like GDPR, PDPA, EU AI Act.

- Operational Risks: Failures, instability, misalignment with business intent.

3 lens of AI Governances Framework

3 lens of AI Governances Framework

AI Governance Framework Components

Designing a robust AI governance framework means building a system of guardrails that ensures AI delivers value safely, responsibly, and legally. Below are the critical components every organization must define clearly and align to their operating context:

1. Governance Policy and Standard

A formal document that outlines:

- Purpose: Why the organization needs AI governance.

- Scope: The types of AI systems, data assets, or business domains included.

- Guiding Principles and Standards: Commitments to fairness, transparency, explainability, accountability, and security and standards for Developer/Deployer/User to follow

- Alignment to Regulations: References to applicable global and local regulations (e.g., GDPR, PDPA, EU AI Act, AI Bill of Rights).

This policy is your north star, guiding all AI-related decision-making.

2. Governance Processes

AI governance must be embedded across the AI lifecycle through well-defined processes:

1. Development:

- Use Case Onboarding: Approval and prioritization criteria, including ethical and societal impact.

- Responsible AI by Design: Incorporate fairness, inclusivity, privacy, and transparency from the outset.

- Model Documentation (Model Cards): Include model purpose, training data, assumptions, limitations, and risks.

- MLOps/LLMOps Integration: Ensure automated testing, version control, deployment, and governance compliance.

2. Usage:

- Model Monitoring: Continuous tracking of performance, drift, and anomalies.

- Feedback Loop: Capture and act on end-user and stakeholder feedback.

- Human-in-the-Loop Controls: Identify scenarios where human review or overrides are mandatory.

- Training & Certification: Educate business and technical users on responsible AI usage and ethics.

3. Risk & Compliance:

- Risk Register: A central log of risks, mitigations, and ownership.

- Regulatory Mapping: Traceability of model features and processes to compliance requirements.

- Auditability: Ensure transparency through version control, logging, and model lineage documentation.

3. Operating Model

Define clear roles, responsibilities, organizational structure nd workflows for AI governance execution:

- Different operationg model: Common models include Centralized, Center of Excellence (CoE), and Hub-and-Spoke. The best choice depends on how your business is already structured. According to Conway’s Law, systems tend to reflect the communication structure of the organization that builds them. The same applies here—your AI governance model should align with how your teams and business units naturally work together.

- Key Roles:

- AI Governance Lead

- Model Owner

- Risk & Compliance Officer

- AI Ethics Committee / Review Board

- Responsibilities: Use a RACI matrix to define who is Responsible, Accountable, Consulted, and Informed.

- Workflow Integration: Align governance with project management and product development lifecycles.

4. Technology Stack

Leverage tools and platforms that support the enforcement of governance policies and monitoring of AI systems:

- Monitoring & Observability: Tools like Evidently AI, Arize, or Fiddler for drift and anomaly detection.

- Lineage & Cataloging: Track the origin and transformations of data/models (e.g., Unity Catalog, Collibra, DataHub).

- Access Management: Implement Role-Based or Attribute-Based Access Control (RBAC/ABAC).

- Audit Logging: Enable immutable, timestamped logs for all critical events.

- Bias & Explainability Tools: Use SHAP, Fairlearn, Lime, or similar to explain decisions and test for bias.

5. Data Governance

Your data governance is the foundation of trustworthy and scalable AI. AI Governance builds on it by introducing model-specific risk, ethics, and lifecycle controls.

- Data Quality Management: Define and enforce data quality rules, thresholds, and alerting mechanisms.

- Metadata Management: Ensure proper tagging, lineage tracking, and classification of all data assets.

- Privacy & Protection: Detect and protect Personally Identifiable Information (PII) via encryption, masking, and anonymization.

- Data Access Policies: Define clear role-based or policy-based data access rules and data usage purposes.

- Data Lifecycle Management: Govern how data is stored, processed, served, archived, and deleted across its full lifecycle.

- Data Governance Processes: Establish structured processes for data stewardship, issue resolution, and standards enforcement.

Factors to Consider in designing your AI Governance

- Organizational Size and Maturity

- Jurisdiction and Legal Context

- Industry-Specific Standards

- AI’s Strategic Importance to Business

- Risk Appetite

- Data Availability and Infrastructure

If Data and AI use cases are your spear, Governance is your shield. Without proper governance, the financial risk is huge:

- 💸 Data breaches cost companies $4.88M on average – Source

- 🚨 Real-world damage: 12 well-known AI and analytics failures

Responsible AI

Responsible AI is a subset of AI governance focused on fairness, transparency, security, privacy, inclusivity, and accountability. Tech providers emphasize its implementation across design, development, and deployment.

📘 Microsoft Responsible AI Guide

📘 AWS Responsible AI

Risk Management

Risk Management is a long-standing function in highly regulated industries like BFSI, Pharma, and Healthcare. As organizations adopt AI, risk governance must evolve.

Types of Risks to Consider

- Climate Risk: Generative AI models (especially LLMs) consume significant electricity—how does this impact ESG goals?

- Financial Risk: AI models affecting trading or pricing decisions.

- Operational Risk: Model instability, data pipeline failures, hallucinations.

- Business Continuity Risk: Over-reliance on fragile AI systems.

- Security Risk: Model injection attacks, prompt leakage, adversarial data poisoning.

Evolving Your Shield

Being data/AI-driven means upgrading your risk management. Risk professionals must develop AI literacy, not just for compliance, but to work collaboratively with developers and business users.

NIST AI Risk Management Framework

Segment Data Governance Guide Data Governance framework - The DCAM CDGC

How to Measure ROI from Your AI Governance Investment

Measuring the return on investment (ROI) for AI governance can be challenging but is essential to demonstrate its value. Focus on these three measurable areas:

- Risk Reduction

Quantify the impact of governance by tracking potential compliance and regulatory risks avoided, such as:- Estimated fines avoided (e.g., GDPR penalties up to €20 million or 4% of global turnover, EU AI Act fines up to €15 million or 3% of turnover)

- Number and severity of governance-related incidents or violations prevented

- Improvements in audit and compliance scores

- Time to Market Acceleration

Measure how governance frameworks reduce deployment delays by:- Comparing average time from development to production before and after governance implementation

- Tracking cycle times for compliance approvals and risk assessments

- Estimating business value gained from faster rollout of AI solutions, such as increased revenue or cost savings

- Operational Performance Metrics (Proxy Metrics)

Use operational indicators to capture improvements in AI system reliability and trustworthiness:- Number of detected and resolved AI failures, anomalies, or biases

- Reduction in incident frequency or severity related to AI models

- Enhanced system uptime and stability statistics

AI Governance Tooling

AI governance tooling is still nascent—unlike mature data governance tools like Purview, Dataplex, or Collibra, there’s no fully integrated solution yet. Here’s what’s currently available:

AI Registry:

All major cloud platforms offer model registries—Vertex AI (GCP), Azure ML, and SageMaker (AWS). These support versioning and lineage but lack key governance metadata like intended use, risk classification, and business ownership. Custom fields or external metadata stores are often needed.Audit Logs for Conformity Assessment:

GCP Cloud Audit Logs, Azure Monitor Logs, and AWS CloudTrail provide infrastructure-level tracking. However, they don’t capture model-specific events by default. To support conformity assessments, you’ll need to instrument your models and set up workflows using Cloud Functions, Azure Functions, or AWS Lambda.Model Cards:

AWS supports model cards via SageMaker, and GCP offers an open-source Model Card Toolkit. Azure seems like currently has no native support. In all cases, generating, storing, and displaying model cards still requires custom development.Commercial Tools (COTS):

- Credo AI shows strong potential for dedicated AI governance, but integration with your cloud and MLOps stack should be validated.

- Alation and Collibra are extending their platforms to include AI governance capabilities, but current offerings vary and may require configuration or customization.

English–Chinese Glossary

A quick translation of multiple english term to chinese

| English Term | 中文 |

|---|---|

| DataOps | 数据运维 |

| MLOps | 机器学习运维 |

| LLMOps | 大语言模型运维 |

| DevOps | 开发运维 |

| AIOps | 人工智能运维 |

| CI/CD (Continuous Integration / Continuous Deployment) | 持续集成 / 持续部署 |

| IT Operations | IT 运维 |

| Risk Management | 风险管理 |

| Climate Risk | 气候风险 |

| AI/Data Governance | AI / 数据治理 |

| Responsible AI | 负责任的人工智能 |

| Human-in-the-loop | 人类参与环节 |

| Explainability AI | 可解释性 |

| RACI | 责任矩阵 |

| Model drift | 模型漂移 |

Summary

While use cases and technologies often take center stage, Deployment, Governance, and Risk Management are critical for sustainable, safe, and trustworthy AI transformation. Ignoring them exposes your organization to operational, ethical, and regulatory risks.

You can’t say you care about AI safety without taking a hard look at your deployment practices, governance frameworks, and risk management capabilities.

3 lens of AI Governances Framework

3 lens of AI Governances Framework

References

- IAPP Certified AI Governance Professional (AIGP): Learn More

- Google, Microsoft, Databricks, AWS MLOps & Responsible AI documentation (linked above)

- CIO.com, Datarobot breach cost studies

- https://github.com/tensorflow/model-card-toolkit

- https://docs.aws.amazon.com/sagemaker/latest/dg/governance.html

- https://www.alation.com/solutions/artificial-intelligence/

- https://www.credo.ai/product